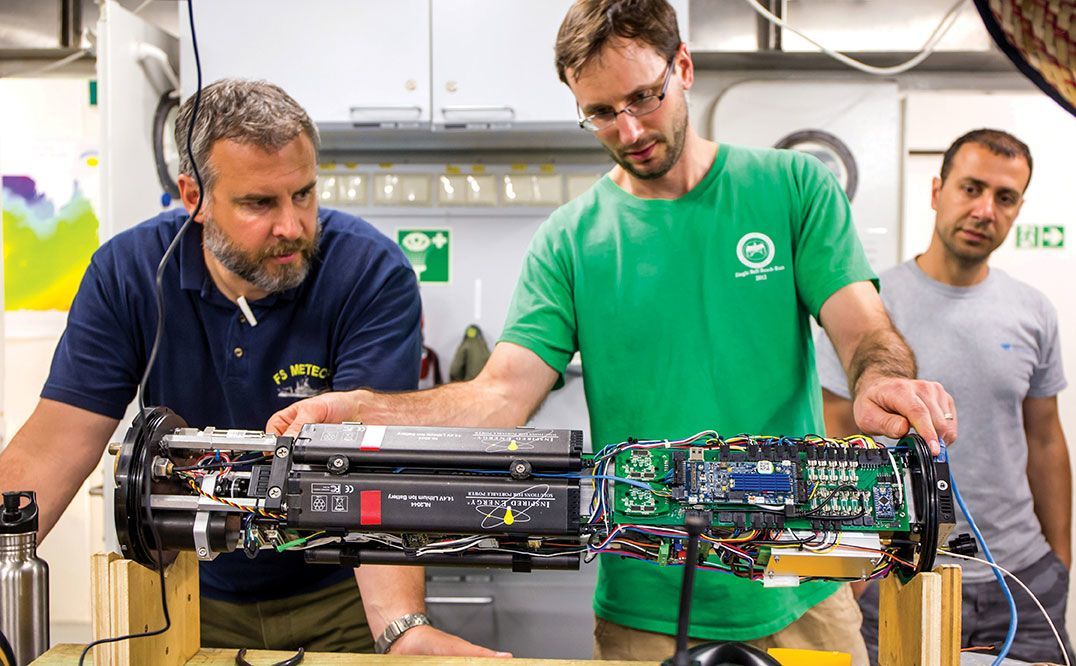

The Coordinated Robotics expedition took place at Scott Reef, a remote area of the Timor Sea between Australia and Indonesia. Chief Scientist Oscar Pizarro from the Australian Centre for Field Robotics, University of Sydney, and an international team of acclaimed robotics researchers and engineers developed and tested advanced methods for autonomous robotic ocean surveys. They used a wide array of simultaneously deployed, coordinating marine robots, including autonomous underwater vehicles (AUVs), gliders, Lagrangian floats, and autonomous surface vessels (ASVs). This project demonstrated the latest advances in oceanographic robotics, bringing engineers closer to deploying large groups of robotic vehicles that autonomously carry out sophisticated oceanographic surveys over long periods of time.

A total of six robotic platforms were deployed from Falkor, sometimes operating simultaneously, collecting data and exchanging information in near real time. The AUVs performed tasks such as taking photographs and collecting water measurements at various depths. The float drifted with ocean currents and adjusted its distance to the bottom while collecting seafloor imagery along with measurements such as temperature and salinity. The glider collected water column data and bathymetry as it moved up and down through the water. The ASV offered an additional way to track the underwater vehicles and relay messages to them. This additional stream of information allowed the floats to “fly” within just tens of meters of the reef surface, a zone where, without such information, gliders would not normally be able to operate. The team also developed a visualization tool for tracking the multiple vehicles so that anyone could follow what was happening in real time.

Additionally, the science team used bathymetric maps collected with Falkor’s multibeam echosounders to identify areas of the lagoon that were poorly represented by existing imagery from other parts of the lagoon. Using the bathymetry in conjunction with the imagery, the scientists identified the sites most valuable for improving habitat models and targeted them for AUV surveys. Falkor’s high-performance computing system, along with computers on land reached through Falkor’s satellite link, was used to correlate the ship’s multibeam sonar data with the AUV benthic imagery and then to propagate the visual features to seafloor areas that had been mapped only acoustically.
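The article does not describe how the visual features were propagated to the acoustically mapped seafloor. As one illustration of the general idea, the sketch below assumes that each AUV-imaged location has been reduced to a few bathymetric features (hypothetical depth and slope values here) plus a habitat label derived from its imagery. It then labels a multibeam-only cell by a k-nearest-neighbor vote in that feature space. The data, the `predict_habitat` function, and the choice of k-NN are all assumptions for illustration, not the team's actual method.

```python
from collections import Counter
from math import dist

# Hypothetical training data: bathymetric features (depth in m, slope in degrees)
# at locations the AUVs imaged, each paired with a habitat label from the imagery.
IMAGED = [
    ((12.0, 3.0), "sand"),
    ((14.0, 2.5), "sand"),
    ((8.0, 15.0), "coral"),
    ((7.5, 18.0), "coral"),
    ((30.0, 1.0), "rubble"),
    ((28.0, 2.0), "rubble"),
]


def predict_habitat(features, training=IMAGED, k=3):
    """Label an acoustically mapped cell by majority vote of its k nearest
    imaged neighbors in (unscaled) bathymetric feature space."""
    nearest = sorted(training, key=lambda item: dist(item[0], features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical multibeam-only cells with no imagery.
unlabeled_cells = [(13.0, 2.0), (8.0, 16.0), (29.0, 1.5)]
habitat_map = [predict_habitat(cell) for cell in unlabeled_cells]
```

In practice the features would be normalized (depth and slope have different units), and richer terrain descriptors would likely be used; k-NN appears here only because it is short and easy to follow.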

In two weeks, the science team collected a huge amount of data, including approximately 400,000 images (about a terabyte of raw data). Some of the images were collected at locations previously visited in 2009 and 2011 and will be added to a multi-year image library, providing valuable long-term monitoring. The imagery collected by these AUVs also supports reef monitoring research in other ways. Many of the photos were taken with a stereoscopic camera along a very precisely navigated grid. Stereo imaging makes it possible to calculate the depth of each scene, which can then be used to create 3D maps. Some of this imagery and its corresponding maps have been gathered into SeafloorExplore, an iOS app that visualizes 3D models of the seafloor. It gives users a view from above the reef and a controlled “flight” through the coral. Besides being a useful research tool that lets users observe the reef and its spatial patterns at multiple scales, this interactive data set offers an amazing experience for casual viewers and ocean lovers.
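Stereo depth follows from simple geometry. For a rectified stereo pair with focal length f (in pixels) and baseline B (the distance between the two cameras), a point that appears shifted by a disparity of d pixels between the left and right images lies at depth Z = fB/d. Back-projecting each pixel with that depth yields the 3D points from which a seafloor model can be built. The minimal sketch below applies these standard pinhole-camera relations; the camera parameters in the example are hypothetical and are not those of the AUVs' stereo rig.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity (or the match failed).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def back_project(u: float, v: float, depth_m: float,
                 focal_px: float, cx: float, cy: float) -> tuple[float, float, float]:
    """Convert pixel (u, v) with known depth into camera-frame (X, Y, Z) in meters,
    using the pinhole model with principal point (cx, cy)."""
    x = (u - cx) * depth_m / focal_px
    y = (v - cy) * depth_m / focal_px
    return (x, y, depth_m)


# Hypothetical camera: 1000 px focal length, 10 cm baseline, 1360x1024 image.
FOCAL_PX, BASELINE_M, CX, CY = 1000.0, 0.10, 680.0, 512.0

z = stereo_depth(FOCAL_PX, BASELINE_M, disparity_px=50.0)   # 2.0 m
point = back_project(880.0, 512.0, z, FOCAL_PX, CX, CY)     # (0.4, 0.0, 2.0)
```

Note that depth resolution degrades as disparity shrinks, which is one reason imaging vehicles fly at a controlled, fairly low altitude above the seafloor.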

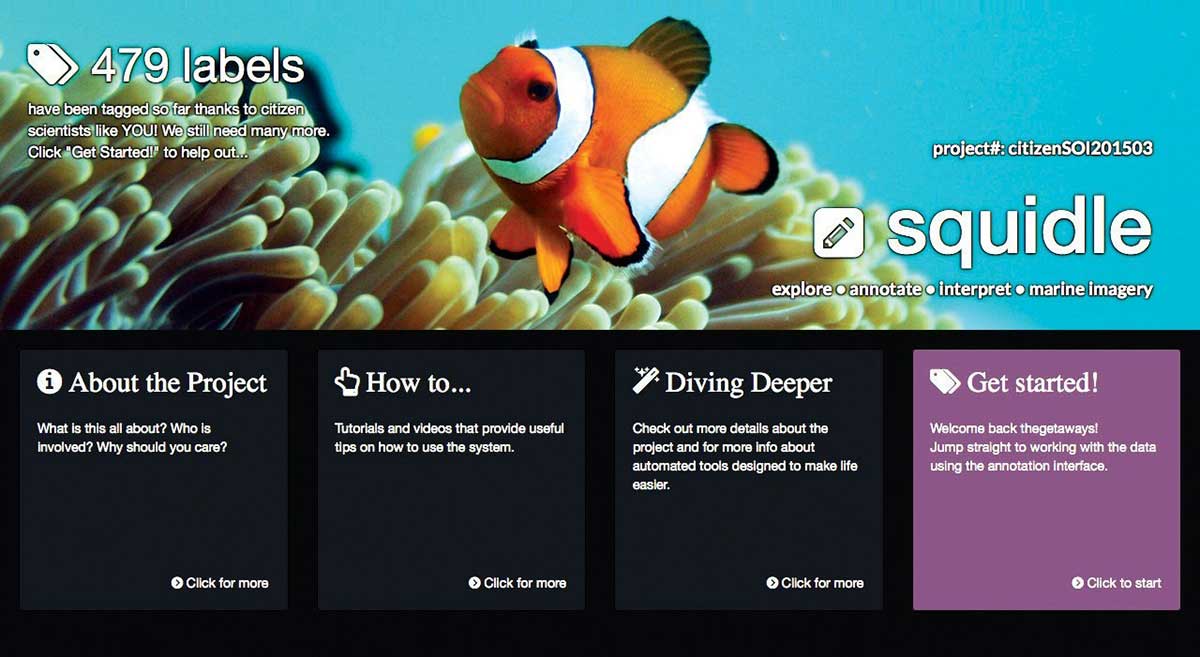

The science team also used computer algorithms to help translate the massive number of images into quantitative information. Australian Centre for Field Robotics postdoctoral research engineer Ariell Friedman developed a citizen science website called Squidle around the images collected by the AUVs.

The Squidle site allowed participants to label 22,569 images of coral reefs and the ocean floor. Having the public tag these images provided more examples for the computer algorithms to learn from, increasing their accuracy. In effect, the team harnessed the power of the public to improve the accuracy of image tagging, a task that will eventually be completed by computers. “We hope that this can be a fun and educational tool that allows students to engage in real science,” said Ariell Friedman. Once they have a large number of labels, the scientists will conduct rigorous analysis to validate the data for training machine learning algorithms.
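The article does not say how the crowdsourced labels will be validated. One common first step, shown here purely as an assumption, is to accept an image's label only when enough volunteers have labeled it and a clear majority agree. The `consensus_labels` function and its thresholds are hypothetical and are not part of Squidle.

```python
from collections import Counter


def consensus_labels(annotations, min_votes=3, min_agreement=0.6):
    """Aggregate volunteer labels by majority vote.

    annotations: iterable of (image_id, label) pairs from volunteers.
    Returns {image_id: label} only for images with at least `min_votes`
    labels whose most common label reaches `min_agreement` of the votes.
    """
    by_image = {}
    for image_id, label in annotations:
        by_image.setdefault(image_id, Counter())[label] += 1

    accepted = {}
    for image_id, counts in by_image.items():
        total = sum(counts.values())
        label, top = counts.most_common(1)[0]
        if total >= min_votes and top / total >= min_agreement:
            accepted[image_id] = label
    return accepted


# Hypothetical volunteer annotations.
annotations = [
    ("img1", "coral"), ("img1", "coral"), ("img1", "sand"),   # 2/3 agree: accepted
    ("img2", "sand"), ("img2", "coral"), ("img2", "rubble"),  # no majority: rejected
    ("img3", "sand"),                                         # too few votes: rejected
]
trusted = consensus_labels(annotations)
```

Images that fail either threshold could be sent back for more volunteer labels or for expert review before they are used as training data.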